Why I need a postgreSQL database

My wine recommender app requires up-to-date prices and inventory of wine at the LCBO. Queries to thousands of bottles and reviews must be fast too. PostgreSQL has a very fast response time and can service my recommender app very quickly.

In this blog, I will share how to:

- Create multiple tables and table relationships

- Populate tables for the first time

- Update tables with changes

Designing PSQL Tables

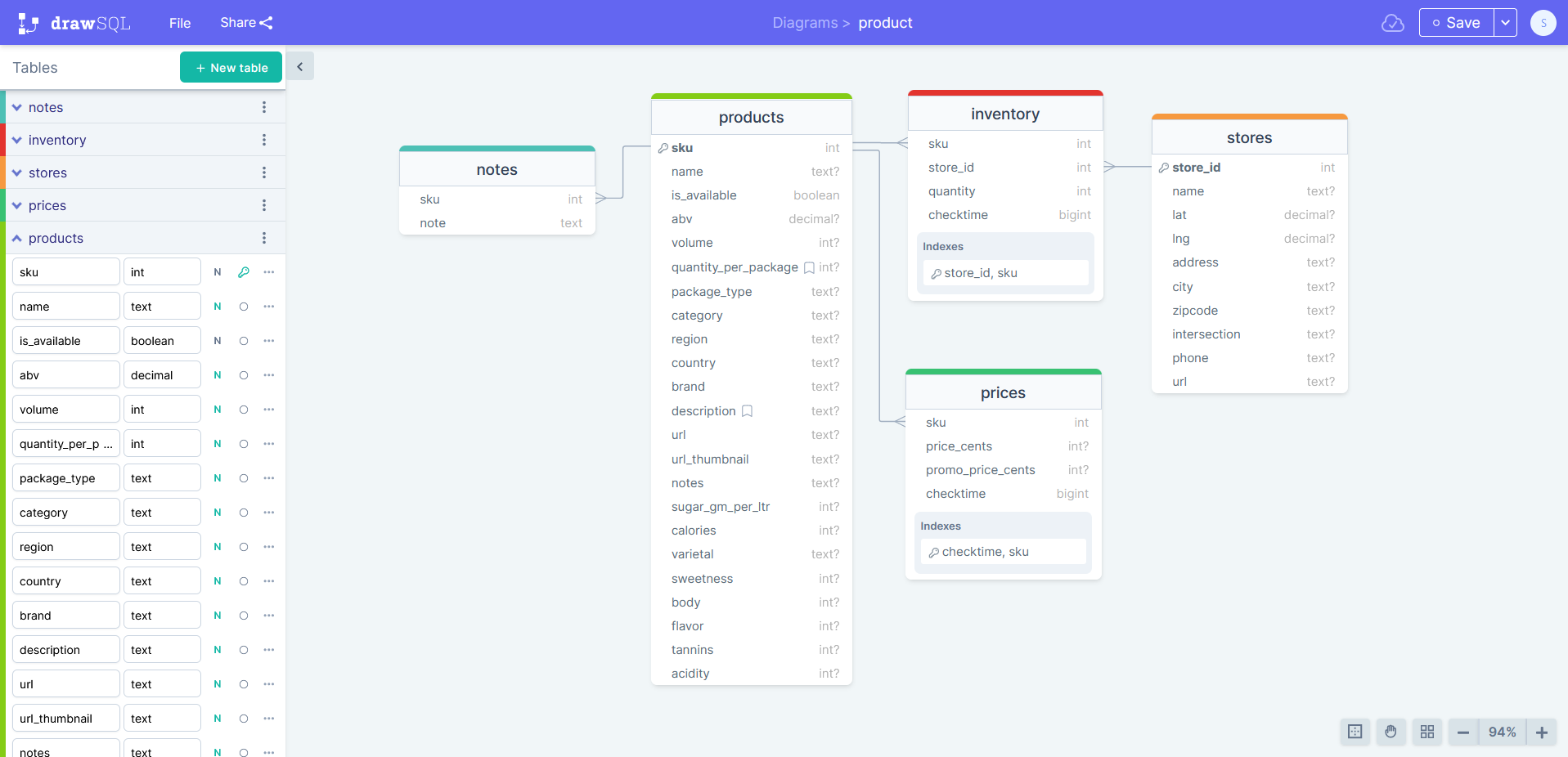

There are three sets of data collected from the LCBO about stores, products, and product inventory. Most of the data features are static or rarely changing. Dynamic features are the prices and inventory. As a result, I organize the data into five tables:

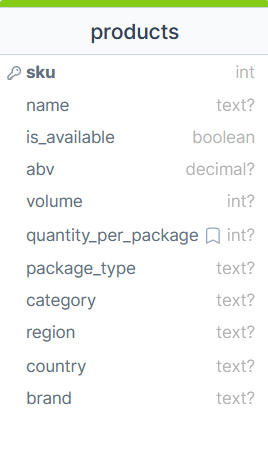

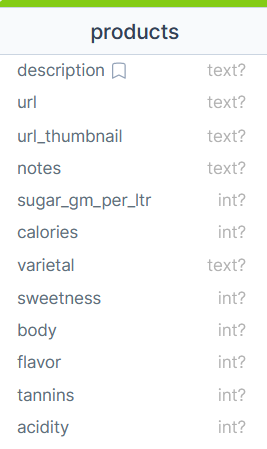

- products (static): description, volume, ABV, etc.

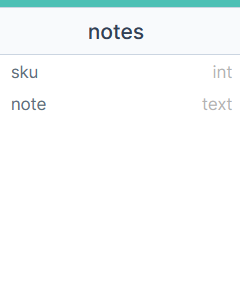

- notes (static): flavours, descriptors

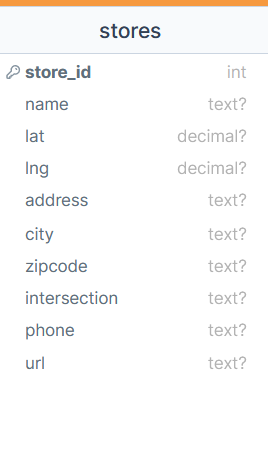

- stores (static): hours, location, address, etc.

- prices (dynamic): price, promo price, last updated

- inventory (dynamic): quantity, last updated

Table details

The LCBO assigns a unique identifier for each store and product, store_id and sku number. These identifiers are the primary keys for each row of data. Below is a snapshot of the column names, data types, and nullability (?) in both tables.

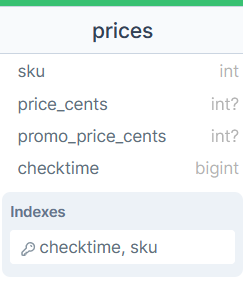

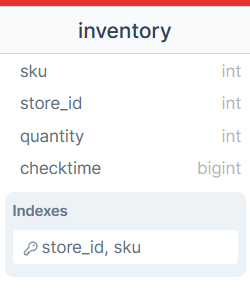

I want to keep track of the price history of a product and only make updates when a price change has been noticed. The prices table uses a composite primary key of two columns, sku and checktime. checktime is the UNIX time for when the row was inserted into the table.

Unlike the prices table, the inventory history is not recorded. This could be changed by creating a composite primary key of three columns. However, this could generate a lot of data that I do not currently need for my app.

Table relationships

The lines connecting the tables indicate the relationships between them. The prices nad notes use composite primary keys derived from products and stores. The inventory has no primary keys but does require the sku value must exist in products.

These tables were designed using DrawSQL. You can freely download the PSQL commands to create these tables in your database. Here is a copy of the script:

-- 1. STORES

CREATE TABLE "stores"(

"store_id" INTEGER NOT NULL,

"name" TEXT NULL,

"lat" DECIMAL(8, 2) NULL,

"lng" DECIMAL(8, 2) NULL,

"address" TEXT NULL,

"city" TEXT NULL,

"zipcode" TEXT NULL,

"intersection" TEXT NULL,

"phone" TEXT NULL,

"url" TEXT NULL

);

ALTER TABLE

"stores" ADD PRIMARY KEY("store_id");

-- 2. products

CREATE TABLE "products"(

"sku" INTEGER NOT NULL,

"name" TEXT NULL,

"is_available" BOOLEAN NOT NULL,

"abv" DECIMAL(8, 2) NULL,

"volume" INTEGER NULL,

"quantity_per_package" INTEGER NULL DEFAULT '1',

"package_type" TEXT NULL,

"category" TEXT NULL,

"region" TEXT NULL,

"country" TEXT NULL,

"brand" TEXT NULL,

"description" TEXT NULL DEFAULT 'No description available.',

"url" TEXT NULL,

"url_thumbnail" TEXT NULL,

"notes" TEXT NULL,

"sugar_gm_per_ltr" INTEGER NULL,

"calories" INTEGER NULL,

"varietal" TEXT NULL,

"sweetness" INTEGER NULL,

"body" INTEGER NULL,

"flavor" INTEGER NULL,

"tannins" INTEGER NULL,

"acidity" INTEGER NULL

);

ALTER TABLE

"products" ADD PRIMARY KEY("sku");

-- 3. PRICES

CREATE TABLE "prices"(

"sku" INTEGER NOT NULL,

"price_cents" INTEGER NULL,

"promo_price_cents" INTEGER NULL,

"checktime" BIGINT NOT NULL

);

ALTER TABLE

"prices" ADD PRIMARY KEY("sku", "checktime");

ALTER TABLE

"prices" ADD CONSTRAINT "prices_sku_foreign" FOREIGN KEY("sku") REFERENCES "products"("sku");

-- 4. INVENTORY

CREATE TABLE "inventory"(

"sku" INTEGER NOT NULL,

"store_id" INTEGER NOT NULL,

"quantity" INTEGER NOT NULL,

"checktime" BIGINT NOT NULL

);

ALTER TABLE

"inventory" ADD PRIMARY KEY("store_id", "sku");

ALTER TABLE

"inventory" ADD CONSTRAINT "inventory_store_id_foreign" FOREIGN KEY("store_id") REFERENCES "stores"("store_id");

ALTER TABLE

"inventory" ADD CONSTRAINT "inventory_sku_foreign" FOREIGN KEY("sku") REFERENCES "products"("sku");

-- 5. NOTES

CREATE TABLE "notes"(

"sku" INTEGER NOT NULL,

"note" TEXT NOT NULL

);

ALTER TABLE

"notes" ADD CONSTRAINT "notes_sku_foreign" FOREIGN KEY("sku") REFERENCES "products"("sku");Note, you can delete a table with the simple command:

DROP TABLE tablename;Make sure to first delete tables that have keys or indices that are derivative of other tables (i.e., tables with foreign keys).

Populating tables with data

We need to define frequently used PSQL functions in python before populating tables. The psycopg2 python module is a PSQL adapter. In short, it permits you to query your PSQL database from a python environment.

Basic Python Functions for PSQL

One-way Query:

The following is a barebones one-way query tool that requires the PSQL address and the query to send. I use this to drop and re-create a table, for example. Do not forget the .commit() to execute your query.

import psycopg2

def query(sql_address, query):

connection = psycopg2.connect(sql_address)

cur = connection.cursor()

cur.execute(query)

connection.commit()

connection.close()Table Query:

The following can return table queries such as “SELECT * FROM products LIMIT 10;”. The return is a list of tuples and the column name for each element in the tuple.

import psycopg2

def query_table(sql_address, query):

connection = psycopg2.connect(sql_address)

cur = connection.cursor()

cur.execute(query)

columnName = [desc[0] for desc in cur.description]

tbl = cur.fetchall()

connection.close()

return tbl, columnName Insert Table Rows:

Here is a function that inserts rows of data to my PSQL database (ref). It has a nice catch for SQL exceptions.

The arguments require the PSQL address, the table name, and column names. records is the data represented as a list of tuples where each tuple’s elements are defined and ordered identically to the list of column names, columns.

Note, psycopg2 has multiple solutions for inserting rows and some are much faster than others (by orders of magnitude). I found psycopg2.extras.execute_values to be the fastest.

import psycopg2

import psycopg2.extras

def bulkInsert(sql_address, tableName, records, columns):

try:

connection = psycopg2.connect(sql_address)

cursor = connection.cursor()

tableColumns = list(columns)

sql_insert_query = f"""

INSERT INTO {tableName} ({', '.join(tableColumns)})

VALUES %s

"""

result = psycopg2.extras.execute_values(cursor, sql_insert_query, records)

connection.commit()

print(cursor.rowcount, f"Record inserted successfully into {tableName} table")

except (Exception, psycopg2.Error) as error:

print("Error while updating PostgreSQL table", error)

finally:

# closing database connection.

if connection:

cursor.close()

connection.close()

print("PostgreSQL connection is closed")Here is an example of how to insert multiple rows with the above code.

sql_address = "postgres://USERNAME:PASSWORD@SERVER/DATABASE"

tablename = "products"

data = [

('La Pamplemousse', 750, 14.5, 19.99),

('Bargain Red Wine', 750, 14.0, 26.99),

]

columns = ['name', 'volume', 'abv', 'price']

bulkInsert(sql_address, tablename, data, columns)The bulkInsert function should suffice for inserting rows of data into a table. If I obtain a new product to insert into the table, I can use query_table to obtain the existing list to ensure the product is indeed new. A simple python solution for products is:

skuOldList = query_table(sql_address, "select sku from products;")

skuList = read_from_data_files()

skuNewList = set(skuList) - set(skuOldList)Creating a Price History Table

I only insert the price if it has changed in value. I need to create a table from our prices table the most recent prices to make a comparison. This could be done using two approaches: temporary tables and views. Choosing which depends on the context and query complexity. The SQL commands are identical except for TEMP TABLE and VIEW.

--CREATE VIEW price_temp AS

CREATE TEMP TABLE temp_prices AS

select sku, price_cents, promo_price_cents, checktime from prices

inner join (

select sku as sku2, max(checktime) as checktime2 from prices

group by sku

) as max_checktime

on sku = sku2

and checktime = checktime2

;Temporary tables exist until the connection to the database is closed. The data added to the table can be updated or changed. It does not depend on original table(s) after creation. This is a more memory intensive process.

Views exist only for one query! Each time the table is called upon, it is regenerated and can differ if the original tables change values (i.e., the view table is always current). This is a more computing intensive process.

The views solution is not suitable for my task because:

- It can be completed in one query

- A single product is considered only once (so, regeneration is not necessary)

- My server has low CPU speeds but plenty of memory

- The task is executed on GitHub Actions. So, using views with low database CPU speeds with views would waste computing time on GitHub actions

- My data is relatively small in memory (~45 MB).

After creating a temporary table, the price data is inserted using the following sample code. Suppose I checked a product and find $15.00 and $10.00 are the regular and promotional prices. If either of these values differ from the last price entry then we need to insert the change.

INSERT into prices (sku, price_cents, promo_price_cents, checktime)

select 10101, 1499, 999, 1686005186

where not exists (

select checktime from temp_prices where

1499 = price_cents and

999 = promo_price_cents and

1686005186 >= checktime and

10101 = sku

)

;Conclusion

This is the final step for storing data generated from a scraper bot in a postgreSQL database. With the blog guides about how to scrape and creating a bot, you can create your own automated database too! I hope these guides can streamline your workflow for creating your datasets.

Thank you!

Remember to always scrape gently :)

Citation

@online{ro2023,

author = {Ro, Stephen},

title = {DIY {Wine} {Database} with {postgreSQL}},

date = {2023-07-01},

url = {https://royourboat.github.io/posts/2023-07-01-lcbo-psql/},

langid = {en}

}